People of color have a new enemy: techno-racism

Updated 1221 GMT (2021 HKT) May 9, 2021

(CNN) As protesters take to the streets to fight for racial equality in the United States, experts in digital technology are quietly tackling a lesser-known but related injustice.

It's called techno-racism. And while you may not have heard of it, it's baked into some of the technology we encounter every day.

Digital technologies used by government agencies and private companies can unwittingly discriminate against people of color, making techno-racism a new and crucial part of the battle for civil rights, experts say.

"It's not just the physical streets. Black folks now have to fight the civil rights fight on the virtual streets, in those algorithmic streets, in those internet streets," says W. Kamau Bell, host of the CNN original series "United Shades of America." Bell explores this digitized form of racism in tonight's episode, which focuses on the role of race in science, tech and related fields.

We talked to some experts to gain a deeper understanding of what techno-racism is and what you can do about it.

What's techno-racism?

Techno-racism describes a phenomenon in which the racism experienced by people of color is encoded in the technical systems used in our everyday lives, says Mutale Nkonde, founder of AI For the People, a nonprofit that educates Black communities about artificial intelligence and social justice.

The term dates back at least to 2019, when a member of a Detroit civilian police commission used it to describe glitchy facial recognition systems that confused Black faces.

It gained new traction last year as the title of a webinar with Tendayi Achiume, a UN special rapporteur on racism, based on a report she wrote. Achiume and other experts argue that digital technologies can implicitly or explicitly exacerbate existing biases about race, ethnicity and national origin.

"Even when tech developers and users do not intend for tech to discriminate, it often does so anyway," Achiume told the UN Human Rights Council last year. "Technology is not neutral or objective. It is fundamentally shaped by the racial, ethnic, gender and other inequalities prevalent in society, and typically makes these inequalities worse."

Or in other words, as Bell says in Sunday's "United Shades" episode:

"Feed a bunch of racist data, collected from a long racist history ... and what you get is a racist system that treats the racism that's put into it as the truth."

So facial recognition systems are an example of it?

Yes, they can be.

Facial recognition technology uses software to identify people by matching images, such as faces in a surveillance video with mug shots in a database. It's a major resource for police departments searching for suspects.

But research has shown that some facial analysis algorithms misidentify Black people, an issue explored in the Netflix documentary "Coded Bias." The American Civil Liberties Union describes facial surveillance "as the most dangerous of the many new technologies available to law enforcement" because it can be racially biased.

"Although the accuracy of facial recognition technology has increased dramatically in recent years, differences in performance exist for certain demographic groups," the United States Government Accountability Office wrote in a report to Congress last year. For example, federal testing found facial recognition technology generally performed better when applied to men with lighter skin and worse on darker-skinned women.

A false facial recognition match even sent a New Jersey man to jail for crimes he didn't commit. Nijeer Parks, who is Black, spent 11 days behind bars in 2019 after the technology mistakenly matched him with a fake ID left at a crime scene. The match was enough for prosecutors and a judge to sign off on a warrant for Parks' arrest.

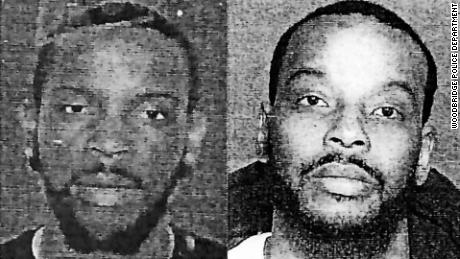

In a similar case, Detroit police in January 2020 arrested Robert Williams outside his suburban home based on a bad facial recognition match. Williams, who also is Black, spent 30 hours in jail before his name was cleared.

"I never thought I'd have to explain to my daughters why Daddy got arrested," Williams wrote in a column for The Washington Post. "How does one explain to two little girls that a computer got it wrong, but the police listened to it anyway?"

A study by the National Institute of Standards and Technology of over 100 facial recognition algorithms found that they falsely identified African American and Asian faces 10 to 100 times more often than Caucasian faces.

Some police departments, government agencies and facial recognition vendors are now cautioning that facial recognition matches should be used only as investigative tools, not as evidence.

What are some other examples of techno-racism?

- Unemployment fraud systems

Some states are using facial recognition to reduce fraud when processing unemployment benefits. Applicants are asked to upload verification documentation, including a photo, and their images are matched against a database to verify their identity.

"This sounds great, but commercial facial recognition technologies used by Amazon, IBM and Microsoft have been found to be 40% inaccurate when identifying Black people," Nkonde said.

"So this will lead to Black people being more likely to be misidentified as attempting to commit fraud, potentially criminalizing them."

- Risk assessment tools

Among such tools are the mortgage algorithms used by online lenders to determine rates for loan applicants.

These algorithms are still using flawed historical data from a period when Black people could not own property, Nkonde said.

In 2019, a study by UC Berkeley researchers found that mortgage algorithms show the same bias against Black and Latino borrowers as human loan officers do. It found that this bias costs people of color up to half a billion dollars more in interest every year than their White counterparts.

The passage of the Federal Fair Housing Act in 1968, which prohibited discrimination based on things such as race and national origin, has not stamped out racism in that industry, Nkonde said. The Department of Housing and Urban Development sued Facebook in 2019, accusing it of targeting housing ads on the platform to select audiences based on race, gender and politics.

Finance professor Adair Morse, co-author of the UC Berkeley study, said that discrimination in lending has shifted from human bias to algorithmic bias.

"Even if the people writing the algorithms intend to create a fair system, their programming is having a disparate impact on minority borrowers -- in other words, discriminating under the law," she said.

Are tech companies doing anything about it?

Last year, Amazon announced it would temporarily stop providing its facial recognition technology to police forces as part of a commitment to fighting systemic racism. So did Microsoft.

IBM also canceled its facial recognition programs and called for an urgent debate on whether the technology should be used in law enforcement.

Nonprofits such as AI For the People are working with Black communities to educate them on how technologies are used in modern life. The group produced a film with Amnesty International as part of the rights group's Ban the Scan campaign.

How else can we fight techno-racism?

When technology reflects biases in the real world, it leads to discrimination and unequal treatment in all areas of life. That includes employment, home ownership and criminal justice, among others.

One way to combat that is to train and hire more Black professionals in the American technology sector, Nkonde said.

She also said voters must demand that elected officials pass laws regulating the use of algorithmic technologies.

In 2019, federal lawmakers introduced the Algorithmic Accountability Act, which would require companies to review and fix computer algorithms that lead to inaccurate, unfair or discriminatory decisions.

"Computers are increasingly involved in the most important decisions affecting Americans' lives -- whether or not someone can buy a home, get a job or even go to jail," said Sen. Ron Wyden, one of the sponsors of the bill. "But instead of eliminating bias, too often these algorithms depend on biased assumptions or data that can actually reinforce discrimination against women and people of color."

It's time to be more skeptical about Silicon Valley and the supposed benefits of technology, said Christiaan van Veen, director of the Digital Welfare State and Human Rights Project, which was established at NYU law school to research digitalization's impact on the human rights of marginalized groups.

"It's good to remember that digital technologies and digital systems are still built with human involvement, not imposed on us by some nonhuman entity," he said. "Like with other expressions of racism, the fight against techno-racism will need to be multipronged and will likely never end."